Data Engineer Resume examples & templates

Copyable Data Engineer Resume examples

Ever wonder who's behind the scenes making sense of the tsunami of data that powers everything from your streaming recommendations to critical business decisions? That's where Data Engineers come in. We're the architects who build robust pipelines and data infrastructure that transform raw information into valuable insights. The field has exploded in recent years—with job postings for Data Engineers jumping 88% since 2019, outpacing nearly all other tech roles.

What makes this career particularly fascinating right now is the shift toward real-time data processing. Gone are the days when overnight batch processing was good enough for everyone; businesses increasingly expect insights within seconds or minutes. Many of us are working with tools like Apache Kafka, Spark Streaming, and cloud-native services to build systems that can handle millions of events per second. And with the rise of data mesh architectures, we're seeing ownership of data quality move closer to domain teams rather than sitting with a central data team. As companies continue their digital transformation journeys, Data Engineers will remain at the forefront, building the foundations that make the data-driven world possible.

Junior Data Engineer Resume Example

Marcus Chen

Boston, MA | (617) 555-9812 | mchen94@gmail.com | linkedin.com/in/marcuschen94

Junior Data Engineer with 1+ year of experience supporting data infrastructure and ETL processes. Recently completed the DataCamp Data Engineering Career Track after pivoting from a statistics background. Quick learner who’s comfortable writing SQL queries, building data pipelines, and working with cloud platforms. Looking to grow in a collaborative environment where I can develop more advanced data engineering skills.

EXPERIENCE

Junior Data Engineer – Vertex Analytics, Boston, MA (Jan 2023 – Present)

- Assist in maintaining PostgreSQL databases and troubleshoot data load failures, reducing pipeline errors by 17%

- Write and optimize SQL queries to extract and transform data for business intelligence dashboards

- Collaborate with 2 senior engineers to migrate an on-premises data warehouse to AWS Redshift

- Built Python scripts to automate data quality checks, saving ~5 hours of manual work weekly

- Participate in code reviews and implement feedback to improve ETL process efficiency

Data Engineering Intern – TechFlow Solutions, Cambridge, MA (May 2022 – Dec 2022)

- Supported ETL pipeline development using Python, SQL, and Apache Airflow

- Created documentation for existing data pipelines to improve knowledge sharing

- Helped design and implement a data lake architecture using AWS S3 and Glue

- Wrote unit tests for data transformation functions to ensure data integrity

Research Assistant – Boston University Statistics Dept, Boston, MA (Sep 2021 – Apr 2022)

- Cleaned and prepared datasets for faculty research using R and Python

- Developed scripts to scrape and parse public data from government websites

- Assisted with statistical analysis and visualization of environmental research data

EDUCATION

DataCamp Data Engineering Career Track – Certification (Completed Dec 2022)

Boston University – B.S. in Statistics, Minor in Computer Science (May 2021)

- Relevant Coursework: Database Systems, Data Mining, Algorithm Design, Statistical Programming

- Senior Project: Built a predictive model for student retention using data from the university's student database

SKILLS

- Programming: Python, SQL, bash scripting, Java (basic)

- Data Tools: PostgreSQL, MySQL, Apache Airflow, Kafka (beginner), dbt

- Cloud: AWS (S3, Redshift, Glue, Lambda), basic Azure knowledge

- ETL/ELT: Building data pipelines, data transformation, data quality testing

- Version Control: Git, GitHub

- Big Data: Basic Spark, working knowledge of data lake architecture

- Other: Docker, Linux, data modeling, API integration

PROJECTS

E-commerce Data Pipeline (GitHub: github.com/mchen94/ecommerce-pipeline)

- Built end-to-end data pipeline to extract data from a mock e-commerce API, transform using Python, and load into PostgreSQL

- Implemented scheduling with Airflow and containerized with Docker

COVID-19 Data Dashboard (GitHub: github.com/mchen94/covid-dashboard)

- Created ETL process to ingest daily COVID data from Johns Hopkins repository

- Built interactive dashboard using Streamlit to visualize trends and vaccination rates

Mid-level Data Engineer Resume Example

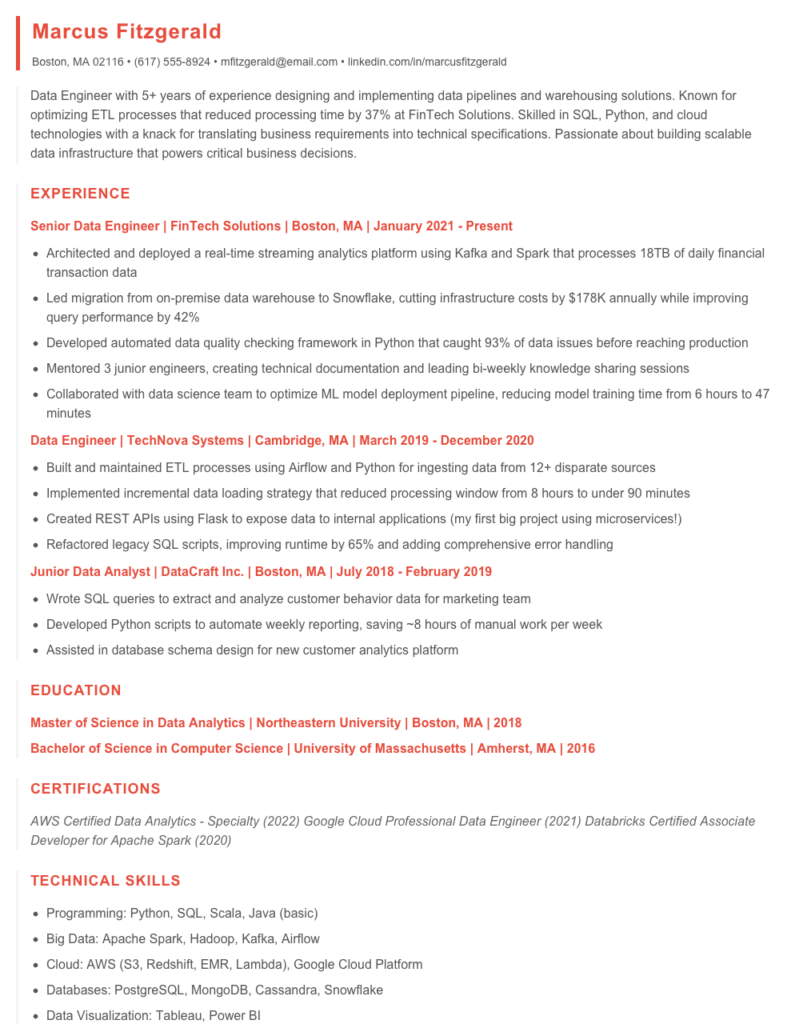

Marcus Fitzgerald

Boston, MA 02116 • (617) 555-8924 • mfitzgerald@email.com • linkedin.com/in/marcusfitzgerald

Data Engineer with 5+ years of experience designing and implementing data pipelines and warehousing solutions. Known for optimizing ETL processes that reduced processing time by 37% at FinTech Solutions. Skilled in SQL, Python, and cloud technologies with a knack for translating business requirements into technical specifications. Passionate about building scalable data infrastructure that powers critical business decisions.

EXPERIENCE

Senior Data Engineer | FinTech Solutions | Boston, MA | January 2021 – Present

- Architected and deployed a real-time streaming analytics platform using Kafka and Spark that processes 18TB of daily financial transaction data

- Led migration from an on-premises data warehouse to Snowflake, cutting infrastructure costs by $178K annually while improving query performance by 42%

- Developed automated data quality checking framework in Python that caught 93% of data issues before reaching production

- Mentored 3 junior engineers, creating technical documentation and leading bi-weekly knowledge sharing sessions

- Collaborated with data science team to optimize the ML model training and deployment pipeline, reducing model training time from 6 hours to 47 minutes

Data Engineer | TechNova Systems | Cambridge, MA | March 2019 – December 2020

- Built and maintained ETL processes using Airflow and Python for ingesting data from 12+ disparate sources

- Implemented incremental data loading strategy that reduced processing window from 8 hours to under 90 minutes

- Created REST APIs using Flask to expose data to internal applications, my first large project built on a microservices architecture

- Refactored legacy SQL scripts, improving runtime by 65% and adding comprehensive error handling

Junior Data Analyst | DataCraft Inc. | Boston, MA | July 2018 – February 2019

- Wrote SQL queries to extract and analyze customer behavior data for marketing team

- Developed Python scripts to automate weekly reporting, saving ~8 hours of manual work per week

- Assisted in database schema design for new customer analytics platform

EDUCATION

Master of Science in Data Analytics | Northeastern University | Boston, MA | 2018

Bachelor of Science in Computer Science | University of Massachusetts | Amherst, MA | 2016

CERTIFICATIONS

AWS Certified Data Analytics – Specialty (2022)

Google Cloud Professional Data Engineer (2021)

Databricks Certified Associate Developer for Apache Spark (2020)

TECHNICAL SKILLS

- Programming: Python, SQL, Scala, Java (basic)

- Big Data: Apache Spark, Hadoop, Kafka, Airflow

- Cloud: AWS (S3, Redshift, EMR, Lambda), Google Cloud Platform

- Databases: PostgreSQL, MongoDB, Cassandra, Snowflake

- Data Visualization: Tableau, Power BI

- Version Control: Git, GitHub, BitBucket

- Container/Orchestration: Docker, Kubernetes (learning)

PROJECTS

Open-Source ETL Framework | github.com/mfitzgerald/dataflow-etl

- Created a lightweight Python ETL framework for small-to-medium data projects that’s been forked 73 times

- Implemented parallel processing capabilities resulting in 3.2x faster data transformations than sequential processing

Senior / Experienced Data Engineer Resume Example

Daniel R. Novak

Denver, CO • (720) 555-8724 • daniel.novak@email.com • linkedin.com/in/danielnovak

Seasoned Data Engineer with 8+ years building scalable data pipelines and architectures that transform business operations. Known for combining deep technical expertise with business acumen to deliver solutions that drive meaningful insights. Proven track record optimizing ETL processes, reducing infrastructure costs by 31%, and mentoring junior engineers across distributed teams.

EXPERIENCE

Senior Data Engineer | Datastream Technologies | April 2020 – Present

- Lead architecture and implementation of cloud-based data lake on AWS, processing 12TB+ daily while reducing infrastructure costs by 31%

- Designed real-time streaming pipeline using Kafka and Spark Streaming that cut data processing time from hours to minutes for 8 critical dashboards

- Migrated legacy ETL processes to modern, containerized architecture (Docker/Kubernetes), improving reliability from 86% to 99.7%

- Mentor team of 5 junior engineers; created comprehensive documentation that reduced onboarding time from 3 weeks to 6 days

- Collaborate with data science team to optimize the ML model training and deployment pipeline, reducing model training time by 43%

Data Engineer | FinTech Solutions Inc. | June 2017 – March 2020

- Built and maintained ETL pipelines using Python, Airflow, and SQL to process 400+ million daily transactions

- Implemented data quality monitoring framework that caught 94% of anomalies before impacting downstream systems

- Created automated testing suite for data pipelines, cutting QA time in half and improving code coverage from 62% to 91%

- Reduced production bugs by 78% by implementing a CI/CD pipeline for data workflows

Data Analyst | Retail Insights Group | August 2015 – May 2017

- Transformed 6 manual reporting processes into automated data pipelines using Python and SQL

- Designed and implemented PostgreSQL database schema supporting retail analytics platform serving 45+ clients

- Built interactive dashboards with Tableau that increased client engagement by 34%

- Collaborated with engineers to improve data collection processes, reducing invalid records from 8.3% to 1.7%

EDUCATION

Master of Science, Computer Science (Data Systems Specialization)

University of Colorado, Boulder • 2015

Bachelor of Science, Mathematics (Minor in Computer Science)

University of Minnesota • 2013

CERTIFICATIONS

AWS Certified Data Analytics Specialty (2022)

Google Cloud Professional Data Engineer (2021)

Databricks Certified Developer for Apache Spark (2019)

TECHNICAL SKILLS

- Languages: Python, SQL, Scala, Java, Bash

- Big Data: Hadoop, Spark, Kafka, Hive, Presto

- Cloud: AWS (S3, EMR, Redshift, Glue), GCP (BigQuery, Dataflow, Dataproc)

- Databases: PostgreSQL, MongoDB, Cassandra, Redis

- ETL/Orchestration: Airflow, Luigi, AWS Step Functions

- Containerization: Docker, Kubernetes

- CI/CD: Jenkins, GitHub Actions, GitLab CI

- Data Visualization: Tableau, Looker, Power BI

PROJECTS

Open Source Contribution: Core contributor to PyFlume, a Python library for Flume integration (2,300+ GitHub stars)

Personal: Built a real-time air quality monitoring system using Raspberry Pi sensors, Kafka, and Grafana dashboards

How to Write a Data Engineer Resume

Introduction

Landing that dream Data Engineer job starts with a resume that showcases your technical skills, problem-solving abilities, and impact on previous organizations. Your resume isn't just a list of jobs—it's a marketing document that should convince hiring managers you can wrangle messy data into valuable insights. I've reviewed thousands of Data Engineer resumes over my career, and I've noticed the difference between ones that get callbacks and those that don't often comes down to specificity and technical relevance.

Resume Structure and Format

Keep your resume clean and scannable, just like well-structured data! According to a 2018 eye-tracking study by Ladders, recruiters spend an average of about 7.4 seconds on an initial resume scan before making a decision.

- Stick to 1-2 pages (1 page for junior roles, 2 pages for 5+ years of experience)

- Choose a clean font like Calibri or Arial at 10-12pt size

- Use consistent formatting for section headers and job titles

- Include plenty of white space—cramped resumes get overlooked

- Save as PDF to preserve formatting (unless specifically asked for .docx)

Profile/Summary Section

Think of this 3-4 line section as your personal query: it should return exactly what the hiring manager is looking for! Target specific skills from the job description and quantify your experience level.

Write your summary last! After drafting the rest of your resume, you'll have a clearer picture of your strongest selling points to highlight up top.

Example: "Data Engineer with 4+ years building scalable ETL pipelines and optimizing database performance. Reduced query times by 73% at FinTech Solutions by implementing columnar storage. Proficient in Python, SQL, Spark, and AWS data services with strong data modeling skills."

Professional Experience

This is where most Data Engineers fall short. Don't just list job duties—show impact! For each role:

- Start with company name, your title, and dates (month/year)

- Add 1-2 sentences describing the company/team context

- Include 4-6 bullet points highlighting achievements

- Begin bullets with strong action verbs (Engineered, Developed, Optimized)

- Quantify results where possible (reduced processing time by 42%, designed architecture supporting 8TB daily ingest)
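When you turn before-and-after measurements into the percentages these bullets call for, the arithmetic is (before - after) / before. Below is a minimal Python sketch, assuming both values are already in the same unit; the helper names `percent_reduction` and `speedup` are hypothetical, not from any library.

```python
def percent_reduction(before: float, after: float) -> float:
    """Return the percentage decrease from `before` to `after`.

    Both values must use the same unit (e.g. minutes). A negative
    result means the metric went up rather than down.
    """
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) * 100 / before


def speedup(before: float, after: float) -> float:
    """Return how many times faster `after` is than `before` (e.g. 3.2x)."""
    if after <= 0:
        raise ValueError("after must be positive")
    return before / after


# Cutting a job from 6 hours (360 minutes) to 47 minutes
print(f"{percent_reduction(6 * 60, 47):.1f}% reduction")  # 86.9% reduction
print(f"{speedup(6 * 60, 47):.1f}x faster")                # 7.7x faster
```

A percentage reduction and a speedup are not interchangeable: going from 100 to 58 minutes is a 42% reduction but only about a 1.7x speedup, so state which one your bullet means.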

Education and Certifications

Most Data Engineer roles require at least a bachelor's degree in Computer Science, Statistics, or related field. List your education with:

- Degree, major, university name, and graduation year

- Relevant coursework (only if you're early career)

- GPA if above 3.5 and you're within 5 years of graduation

- Technical certifications with dates (AWS Certified Data Analytics, Google Cloud Professional Data Engineer, etc.)

Keywords and ATS Tips

Many companies use Applicant Tracking Systems (ATS) to filter resumes before human eyes ever see them. Beat the bots by:

- Tailoring your resume for each job application

- Including exact keywords from the job posting

- Spelling out acronyms at least once (write "Amazon Web Services (AWS)" first, then AWS) and using full product names on first mention (Apache Kafka, then Kafka)

- Using standard section headings (Experience, not "Where I've Worked")

- Avoiding tables, headers/footers, and text boxes that can confuse an ATS
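Tailoring by hand is easier with a quick keyword coverage check. The Python sketch below is a rough approximation, not a model of how any particular ATS works: it assumes you supply your own list of keywords copied from the posting, and it reports which ones never appear in your resume text (case-insensitive, whole-word match). The function name `missing_keywords` and the sample strings are hypothetical.

```python
import re


def missing_keywords(resume_text: str, keywords: list[str]) -> list[str]:
    """Return the keywords that never appear in resume_text.

    Matching is case-insensitive and requires the keyword to stand alone,
    so "Spark" does not match inside "Sparkle". Real ATS products use their
    own (often undisclosed) parsing rules; this is only a rough check.
    """
    missing = []
    for kw in keywords:
        # Lookarounds instead of \b so names ending in symbols (e.g. "C++") still match;
        # re.escape treats characters like "+" or "." literally.
        pattern = r"(?<!\w)" + re.escape(kw) + r"(?!\w)"
        if not re.search(pattern, resume_text, flags=re.IGNORECASE):
            missing.append(kw)
    return missing


resume = "Built ETL pipelines with Apache Airflow and Spark on AWS (S3, Redshift)."
posting_keywords = ["Airflow", "Spark", "Kafka", "Redshift", "dbt"]
print(missing_keywords(resume, posting_keywords))  # ['Kafka', 'dbt']
```

Treat a non-empty result as a prompt to review your wording, and add a missing term only where it honestly describes your experience.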

Industry-specific Terms

Depending on your specific Data Engineering focus, weave in relevant technical terms:

- Data pipeline tools: Airflow, Luigi, Prefect, Dagster

- Big data technologies: Hadoop, Spark, Hive, Kafka

- Cloud platforms: AWS (Redshift, S3, Glue), GCP (BigQuery, Dataflow), Azure

- Languages: Python, Scala, Java, SQL

- Data modeling concepts: Star schema, snowflake schema, data vault

- Data storage: Data warehousing, data lakes, OLAP vs. OLTP

Common Mistakes to Avoid

I've seen these resume killers too many times:

- Being vague about technical skills ("familiar with AWS" instead of naming specific services)

- Focusing on responsibilities instead of achievements

- Listing every technology you've ever touched (focus on relevant ones)

- Including outdated or irrelevant technologies (unless the job specifically requires them)

- Writing dense paragraphs that bury your accomplishments

Before/After Example

Before: "Responsible for ETL processes and database maintenance."

After: "Engineered fault-tolerant ETL pipelines processing 13TB daily, reducing system failures by 87% and cutting processing time from 6 hours to 47 minutes using Spark and Airflow."

The difference? Specificity, metrics, and technologies that tell the hiring manager exactly what you can do for them. Remember—your resume isn't just a history of your career; it's a preview of the value you'll bring to your next employer.


Soft skills for your Data Engineer resume

- Cross-functional communication – comfortable explaining technical concepts to non-technical stakeholders and translating business requirements into data solutions

- Project scope management – ability to push back on unrealistic timelines while suggesting practical alternatives that meet core business needs

- Team mentorship – guiding junior engineers through complex ETL processes without micromanaging their approach

- Adaptability to changing data environments – quick to learn new technologies when projects shift direction (learned dbt in 3 weeks when our team switched from Informatica)

- Proactive problem identification – spotting potential data quality issues before they impact downstream analytics

- Stress management during production incidents – maintaining clear thinking when pipelines fail during critical business periods

Hard skills for your Data Engineer resume

- Apache Spark & Hadoop ecosystem (4+ years building ETL pipelines)

- SQL database optimization & NoSQL architecture (MongoDB, Cassandra)

- Python programming with pandas, NumPy & scikit-learn libraries

- AWS cloud infrastructure (EC2, S3, Redshift, Glue)

- Data warehouse design & dimensional modeling

- Streaming data processing (Kafka, Kinesis)

- Docker containerization & Kubernetes orchestration

- Git version control & CI/CD pipeline implementation

- Databricks Certified Associate Developer for Apache Spark